The Spectrum of Agentic AI: From Basic Prompts to Elite Specialized Teams

I wasted $47,000 on AI before building a system that actually makes money.

Most companies treat AI as a $20/month ChatGPT subscription. We built a 33-agent system that does the work of 8 full-time employees, saving around $480,000 annually while producing better output than we got when humans did the work.

The difference between renting AI and owning AI infrastructure is the difference between spending money and making money. If you’re still opening ChatGPT for every task, you’re hemorrhaging money in wasted productivity while your competitors build systems that compound.

I spent six months and $47,000 in failed experiments to learn this. This article could save you both and show you how to build infrastructure that pays for itself in 90 days.

Six months later, we have 33 specialized agents organized into seven departments, handling everything from design reviews to QA testing to content operations. And I wish I could tell you we planned it brilliantly from the start. We didn’t. We made basically every mistake you can make when building agentic systems, and then we had to unwind them one by one.

This article is about the spectrum I discovered along the way. Five distinct levels of AI maturity, from “ChatGPT for everything” to “holy crap we built production infrastructure.” By the end, you’ll know exactly where you sit and what it actually takes to move up.

Here’s the part nobody tells you: the gap between Level 1 and Level 5 isn’t a matter of incremental improvement. It’s the difference between a calculator and an operating system. Between a tool you rent and infrastructure you own.

Level 1: The solitary AI user, starting fresh with every task

Level 1 - The ChatGPT Window (Where Everyone Starts)

Let me paint you a picture of Level 1, because I lived here for months and convinced myself I was being productive.

You open ChatGPT. You type something like “Write a blog post about AI agents.” You get 600 words of perfectly adequate, completely generic content. You spend 20 minutes editing it to sound like your actual voice and fix the parts where it clearly has no idea what it’s talking about. Tomorrow, you do it again. And again. And again.

The appeal is obvious. It’s fast, it’s convenient, and it requires zero setup. No process thinking, no infrastructure planning, just you and a chat window. Marketing teams operate this way constantly. One person asks for social posts, another requests email copy, a third generates ad headlines. Each request is isolated. Each output is disconnected. There’s no memory, no consistency, no learning.

Here’s what makes it insidious: it works. Sort of. It’s definitely faster than writing from scratch. But you’re still the bottleneck. You’re still making every decision, catching every mistake, fixing every hallucination. The AI isn’t an agent. It’s a very sophisticated autocomplete that forgets everything the moment you close the window.

I remember spending an entire Tuesday in April retyping basically the same instructions to ChatGPT for different content pieces. “Make it friendly but professional. Target SaaS founders. Include a CTA. Keep it under 500 words.” And then doing it again an hour later, because I’d started a fresh conversation and it had no memory of our brand voice.

The technical problem is actually pretty straightforward: you’re re-teaching the AI from scratch every single time. Not retraining the model, just re-supplying all the context it needs, by hand. There’s no version control for your prompts, no consistency across outputs, no way to capture what works and iterate on it. You’re paying a subscription for a tool you have to re-teach every time you use it.

Here’s how you know you’re stuck at Level 1: You start fresh ChatGPT conversations for each task. You retype similar instructions multiple times per week. The quality varies wildly. And you spend 15-30 minutes editing every single output because you can’t quite trust it.

The truth is brutal. If you’re at Level 1, you’re renting AI’s brain for 30-second intervals. There’s no compounding value. No learning. No improvement over time.

So what’s the upgrade path? You realize that retyping instructions is insane, and you start building templates.

Level 2: Building reusable templates for consistency

Level 2 - Templates and Custom GPTs (Consistency Without Depth)

By May, I’d gotten smart. Or so I thought.

I built templates for everything. A “Blog Post Generator” that knew our brand voice, target audience, and structural preferences. A “Social Media Assistant” with pre-loaded tone guidelines. An “Email Campaign Writer” that output consistent formats. I even set up custom GPTs with detailed instructions and example outputs.
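Under the hood, a Level 2 template is just a parameterized prompt: the fixed parts (voice, audience, structure) are written once, and only the topic changes. Here’s a minimal sketch using Python’s standard `string.Template`, assuming a plain-text prompt; the template wording, `BRAND_DEFAULTS`, and `build_blog_prompt` are hypothetical, with the settings borrowed from the instructions I used to retype by hand.

```python
from string import Template

# Hypothetical "Blog Post Generator": voice, audience, and structure are
# fixed once; only the topic changes from request to request.
BLOG_POST_TEMPLATE = Template(
    "You are writing for $audience.\n"
    "Voice: $voice.\n"
    "Structure: $structure.\n"
    "Keep it under $max_words words and include a call to action.\n\n"
    "Topic: $topic"
)

# Illustrative defaults, captured once instead of retyped every session.
BRAND_DEFAULTS = {
    "audience": "SaaS founders",
    "voice": "friendly but professional",
    "structure": "hook, three key points, CTA",
    "max_words": 500,
}


def build_blog_prompt(topic: str) -> str:
    """Fill the template; substitute() raises KeyError if any field is missing."""
    return BLOG_POST_TEMPLATE.substitute(BRAND_DEFAULTS, topic=topic)


if __name__ == "__main__":
    print(build_blog_prompt("AI agents for small teams"))
```

Notice what the template can’t do: a feature announcement and a thought-leadership piece get exactly the same voice and structure. That’s consistency without depth.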

This was a real upgrade. Every request now started with our context already baked in, so we weren’t starting from zero. Our marketing person could generate 10 LinkedIn posts in the time it used to take to write one. The outputs were consistent, the brand voice was recognizable, the structure was repeatable.

I thought we’d made it. We hadn’t.

Here’s the limitation I didn’t see coming: templates give you consistency, but they don’t give you depth. Our “Blog Post Generator” produced the same structure whether we were writing about feature announcements or thought leadership. Our “Social Media Assistant” used the same tone whether we were engaging with developers or executives. The template didn’t know the difference because I didn’t teach it to care.

We’d industrialized mediocrity. Every output was 70% good. Consistent 70%, repeatable 70%, scalable 70%. But we couldn’t break through to excellence because the template didn’t have expertise. It had instructions.

I noticed this most clearly when we tried to use our SEO template for technical content. It would insert keywords in all the right places, follow the structural guidelines perfectly, and produce something that was technically optimized but completely missed the actual search intent. It was following rules without understanding why the rules existed.

The template couldn’t tell the difference between keyword stuffing and semantic relevance because it didn’t actually understand SEO. It just had a checklist.

That’s when I realized we didn’t need better templates. We needed specialists.

Level 3: Specialized agents with deep expertise working in isolation

Level 3 - Specialized Agents (Depth Without Collaboration)

June was when things got interesting. And by interesting, I mean “we accidentally created 12 specialists who couldn’t talk to each other.”

Instead of one “Blog Post Generator,” we built six specialists: an SEO Strategist, a Hook Architect, a Brand Voice Guardian, a CTA Specialist, a Technical Accuracy Reviewer, and a Visual Storyteller. Each agent had deep expertise in one domain. Each agent knew why its role mattered and how to evaluate quality.

The outputs improved immediately. Our SEO Strategist didn’t just insert keywords; it analyzed search intent, evaluated keyword difficulty, and structured content for featured snippets. Our Hook Architect didn’t just write openings; it applied psychological frameworks and knew which hook archetype suited which audience.

For the first time, we were getting outputs that felt like they came from actual domain experts, not generic AI assistants.

Here’s what I didn’t anticipate: coordination chaos.

The SEO Strategist would optimize for search, but the Brand Voice Guardian would hate the keyword-stuffed headline. The Hook Architect would write a contrarian opening, but the CTA Specialist would say it didn’t align with the conversion goal. Everyone was doing excellent work in conflicting directions.

I spent three weeks in July just managing handoffs. The SEO analysis would finish, I’d manually pass the insights to the Content Creator, they’d generate a draft, I’d send it to the Brand Guardian for review, they’d send it back with feedback, and somewhere in that process we’d lose 30% of the original SEO recommendations because nobody had a standardized format for passing context.

We’d created expertise silos. Powerful in isolation, but real work requires collaboration. And we had none.

I remember one particularly frustrating day where our UX Researcher identified that our onboarding flow was confusing new users, our UI Designer created a beautiful solution that completely ignored the research findings, and our Frontend Developer implemented something that worked technically but missed both the UX insights and the design intent. Three specialists, zero collaboration.

That’s when I realized we didn’t just need specialists. We needed teams.

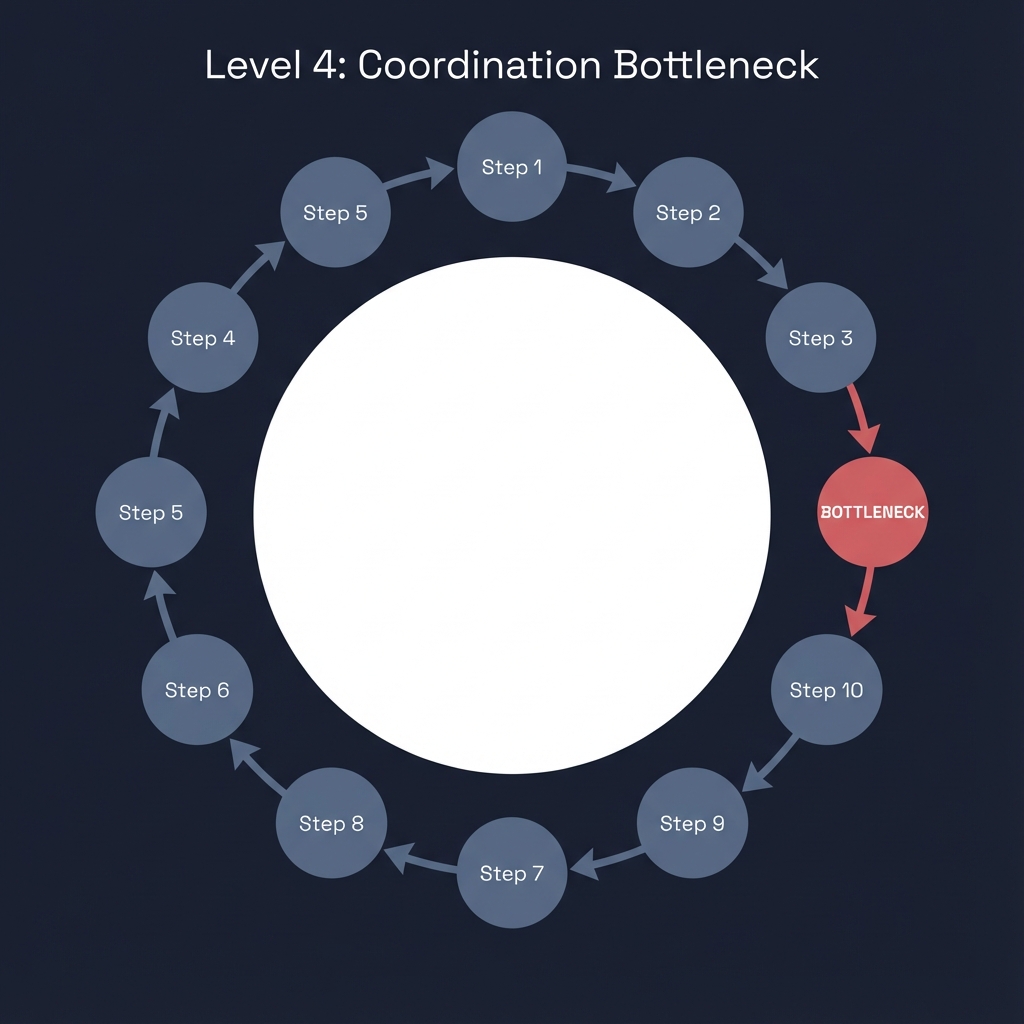

Level 4: Teams collaborating but struggling with coordination chaos

Level 4 - Team-Based Agents (Collaboration Without Structure)

August was chaos. Productive chaos, but chaos nonetheless.

We started running multiple agents on the same project. A content workflow might involve 12 agents - a Research Team, a Creation Team, and an Optimization Team. The output quality jumped. We were hitting 85%, sometimes 90%. But coordination became its own full-time job.

Here’s what nobody tells you about multi-agent systems: parallel execution is amazing until everyone finishes at different times and you realize you don’t have a standardized way to merge their outputs.

The Research Team would hand off insights to the Creation Team, but the format wasn’t standardized. The Hook Architect would write an opening based on one psychological driver, but the Audience Psychologist had identified a different primary motivation. The SEO Specialist would optimize the headline, but the Brand Guardian would say it was off-voice.
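Merging gets much easier when every agent returns the same envelope. Here’s a minimal sketch with Python’s `asyncio`, assuming each agent is an async function; `AgentOutput`, `run_agent`, and the placeholder recommendations are hypothetical, and `asyncio.sleep` stands in for the actual model call.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class AgentOutput:
    """Hypothetical envelope every agent returns, whatever its specialty."""
    agent: str
    summary: str
    recommendations: list[str] = field(default_factory=list)


async def run_agent(name: str, delay: float, recs: list[str]) -> AgentOutput:
    # Stand-in for a model call; the delays make agents finish out of order.
    await asyncio.sleep(delay)
    return AgentOutput(agent=name, summary=f"{name} finished", recommendations=recs)


async def run_research_team() -> dict[str, AgentOutput]:
    outputs = await asyncio.gather(
        run_agent("seo_specialist", 0.03, ["placeholder SEO recommendation"]),
        run_agent("audience_psychologist", 0.01, ["placeholder primary motivation"]),
        run_agent("hook_architect", 0.02, ["placeholder opening angle"]),
    )
    # gather() returns results in argument order, not completion order,
    # so the merged dict comes out the same on every run.
    return {out.agent: out for out in outputs}


if __name__ == "__main__":
    for name, out in asyncio.run(run_research_team()).items():
        print(name, out.recommendations)
```

A shared envelope only solves the format problem. It doesn’t decide whose recommendation wins when the Hook Architect and the Audience Psychologist disagree.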

Everyone was doing excellent work. In conflicting directions. With no system to resolve disagreements or prioritize trade-offs.

Do you optimize for SEO ranking or brand consistency? Do you prioritize emotional resonance or conversion metrics? Every project became a negotiation between agents who each thought their domain was most important.
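One simple way to settle those trade-offs once, instead of renegotiating them on every project, is a fixed priority order. Here’s a minimal sketch; the ranking in `PRIORITY` and the `resolve` helper are hypothetical examples, and real conflicts often need more nuance than “highest rank wins.”

```python
# Hypothetical ranking: earlier agents win when proposals conflict.
PRIORITY = ["brand_guardian", "cta_specialist", "seo_specialist", "hook_architect"]


def resolve(proposals: dict[str, str]) -> tuple[str, str]:
    """Return (agent, proposal) from the highest-ranked agent that proposed something."""
    for agent in PRIORITY:
        if agent in proposals:
            return agent, proposals[agent]
    raise ValueError("no proposal from a ranked agent")


if __name__ == "__main__":
    headline_proposals = {
        "seo_specialist": "Agentic AI: A Complete Guide",
        "brand_guardian": "I Wasted $47,000 on AI. Here's What Worked.",
    }
    print(resolve(headline_proposals))
```

The value isn’t the specific order. It’s that the order is written down, so two agents can’t deadlock on the same argument twice.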

I spent one entire week in September just trying to define a workflow for feature development that wouldn’t create bottlenecks. The UI Designer needed to finish before the Frontend Developer could start. The Backend Architect couldn’t begin until we had API specs. The QA Engineer was sitting idle until everyone else delivered. If one agent failed, the whole workflow stalled.
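Those dependencies form a graph, and Python’s standard `graphlib.TopologicalSorter` can turn that graph into stages of agents that are safe to run in parallel. Here’s a minimal sketch; the node names are hypothetical, including `api_spec`, a stand-in for whatever produces the specs the Backend Architect waits on.

```python
from graphlib import TopologicalSorter

# Hypothetical feature workflow: each node maps to the nodes it waits on.
WORKFLOW = {
    "api_spec": set(),
    "ui_designer": set(),
    "backend_architect": {"api_spec"},
    "frontend_developer": {"ui_designer", "backend_architect"},
    "qa_engineer": {"frontend_developer", "backend_architect"},
}


def parallel_stages(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group nodes into stages; everything within one stage can run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


def blocked_by(graph: dict[str, set[str]], failed: str) -> set[str]:
    """Every node that directly or transitively depends on a failed node."""
    blocked: set[str] = set()
    changed = True
    while changed:
        changed = False
        for node, deps in graph.items():
            if node not in blocked and (failed in deps or deps & blocked):
                blocked.add(node)
                changed = True
    return blocked


if __name__ == "__main__":
    for i, stage in enumerate(parallel_stages(WORKFLOW), start=1):
        print(f"stage {i}: {stage}")
    print("blocked if ui_designer fails:", sorted(blocked_by(WORKFLOW, "ui_designer")))
```

`blocked_by` makes the stall visible: if the UI Designer fails, both the Frontend Developer and the QA Engineer sit idle, while the Backend Architect can keep going.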

We were amplifying output, but we were also amplifying complexity. We had teams, but no orchestration. Handoffs were unclear. Quality criteria conflicted. There was no system.

That’s when I realized what we were missing: structure. Not just better processes, but actual architecture.

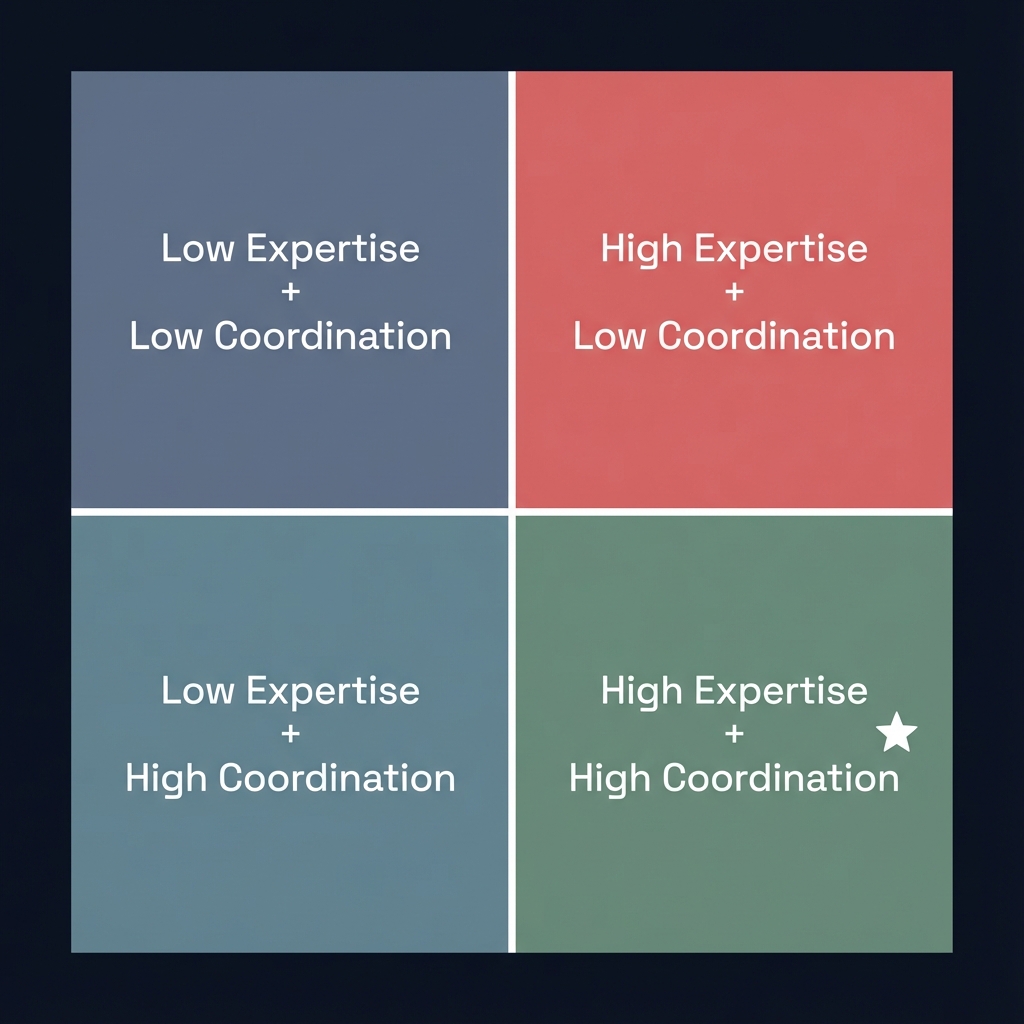

Level 5: Elite orchestration - 33 specialists working in harmony

Level 5 - Elite Orchestration (What Actually Works)

September through November was when we finally stopped building features and started building infrastructure.

Here’s what we have now: 33 specialized agents organized into 7 departments. Not ad-hoc teams. Departments with clear mandates, documented workflows, and quality gates at every handoff.

Engineering has 6 agents. Design has 5. Marketing has 6. Product has 3. Testing has 3. Project Management has 2. Studio Operations has 2. Writing has 5. Plus 8 content-creation specialists for viral content optimization.

But here’s what actually matters: every single agent is defined by a production-grade specification with five critical components that took us three months to get right.

First - Input/Output Validation

Every agent declares what it expects to receive and what it will deliver. The SEO Content Strategist requires a target keyword, search intent analysis, and competitive landscape. It outputs keyword clusters, content structure recommendations, and internal linking strategy. If inputs are missing, the agent flags it before starting work.

No more “I didn’t have enough context” excuses. No more guessing what format the next agent needs.
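Here’s a minimal sketch of that contract in Python. The three inputs and three outputs come from the SEO Content Strategist description; `AgentSpec`, the snake_case field names, and the `check_inputs`/`check_outputs` helpers are hypothetical, and a production system might use a schema library instead of hand-rolled checks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """Hypothetical declaration of what an agent consumes and produces."""
    name: str
    required_inputs: tuple[str, ...]
    outputs: tuple[str, ...]


def missing_fields(fields: tuple[str, ...], payload: dict) -> list[str]:
    """Fields that are absent or empty, in declared order."""
    return [f for f in fields if not payload.get(f)]


SEO_CONTENT_STRATEGIST = AgentSpec(
    name="seo_content_strategist",
    required_inputs=("target_keyword", "search_intent", "competitive_landscape"),
    outputs=("keyword_clusters", "content_structure", "internal_linking"),
)


def check_inputs(spec: AgentSpec, payload: dict) -> str:
    # Flag missing context before any work starts, instead of guessing.
    missing = missing_fields(spec.required_inputs, payload)
    if missing:
        return f"{spec.name}: blocked, missing {', '.join(missing)}"
    return f"{spec.name}: ready"


def check_outputs(spec: AgentSpec, result: dict) -> list[str]:
    # The same check on the way out means the next agent gets the declared format.
    return missing_fields(spec.outputs, result)
```

Running the same check on outputs is what turns “no standardized format for passing context” into a hard failure instead of silently lost recommendations.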

Second - Quality Criteria

Every agent has measurable quality thresholds. The Blog Content Writer’s output is scored on narrative flow (25%), technical accuracy (20%), brand alignment (20%), psychological resonance (15%), SEO optimization (10%), and CTA effectiveness (10%). A score below 92% triggers a self-critique loop.

The agent doesn’t just fail: it explains why and proposes fixes.
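The rubric arithmetic is a weighted average. Here’s a minimal sketch using the Blog Content Writer weights and the 92% threshold; it assumes each dimension already has a 0-100 score (how those scores are produced, for example by a grader model, is left open), and `weakest_dimensions` is a hypothetical helper for the “explains why” step.

```python
# Weights from the Blog Content Writer rubric; they sum to 1.0.
WEIGHTS = {
    "narrative_flow": 0.25,
    "technical_accuracy": 0.20,
    "brand_alignment": 0.20,
    "psychological_resonance": 0.15,
    "seo_optimization": 0.10,
    "cta_effectiveness": 0.10,
}
THRESHOLD = 92.0  # below this, the self-critique loop runs


def quality_score(scores: dict[str, float]) -> float:
    """Weighted average of per-dimension scores on a 0-100 scale."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"unscored dimensions: {sorted(missing)}")
    return sum(weight * scores[dim] for dim, weight in WEIGHTS.items())


def needs_critique(scores: dict[str, float]) -> bool:
    return quality_score(scores) < THRESHOLD


def weakest_dimensions(scores: dict[str, float], n: int = 2) -> list[str]:
    """Dimensions costing the most points, ranked by weight * (100 - score)."""
    shortfall = {dim: w * (100 - scores[dim]) for dim, w in WEIGHTS.items()}
    return sorted(shortfall, key=shortfall.get, reverse=True)[:n]
```

For example, made-up scores of 95, 90, 96, 88, 92, and 85 (in the order above) give 23.75 + 18 + 19.2 + 13.2 + 9.2 + 8.5 = 91.85, just under the bar, and the largest weighted shortfalls point at technical accuracy and psychological resonance.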

Third - Self-Critique Prompts

After generating output, every agent runs a self-evaluation: “Does this hook use a recognized psychological archetype?” “Does the CTA align with the primary motivation identified in audience research?” “Are there unexplained jargon terms?”

Quality control happens before human review, not after.
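Structurally, self-critique is a loop: check the draft, revise against whatever failed, and stop when everything passes or a round limit is hit. Here’s a minimal sketch; the questions are adapted from the ones above, but the string-matching predicates, `JARGON`, and `toy_revise` are hypothetical stand-ins for what would likely be model calls in a real system.

```python
from typing import Callable

JARGON = {"synergy", "leverage"}  # hypothetical list of terms that need explaining

# Each check pairs a self-critique question with a predicate that returns True
# when the draft passes. String matching stands in for a model-based judgment.
CHECKS: list[tuple[str, Callable[[str], bool]]] = [
    ("Does the draft include a call to action?", lambda d: "subscribe" in d.lower()),
    ("Is the draft free of unexplained jargon?", lambda d: not (JARGON & set(d.lower().split()))),
]


def failed_checks(draft: str) -> list[str]:
    return [question for question, passes in CHECKS if not passes(draft)]


def self_critique(
    draft: str,
    revise: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> tuple[str, list[str]]:
    """Revise until all checks pass or rounds run out; return the draft and open issues."""
    for _ in range(max_rounds):
        failures = failed_checks(draft)
        if not failures:
            return draft, []
        draft = revise(draft, failures)
    # Whatever still fails goes to human review along with the draft.
    return draft, failed_checks(draft)


def toy_revise(draft: str, failures: list[str]) -> str:
    """Hypothetical reviser that only knows how to add a CTA."""
    if any("call to action" in f for f in failures):
        draft += " Subscribe for more."
    return draft
```

The round limit matters: a check the reviser can’t fix (here, the jargon check) surfaces as an open issue for the human reviewer instead of looping forever.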

Fourth - Edge Case Documentation

Every agent documents known failure scenarios. The Twitter/X Specialist knows it struggles with highly technical audiences because engineers prefer depth over snark. The Hook Architect knows contrarian openings fail for risk-averse enterprise buyers.

When an edge case is detected, the agent warns you: “This content targets CTOs, and contrarian hooks may backfire. Consider the Question archetype instead.”
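Edge-case documentation can be made executable as rules checked against the brief before an agent runs. Here’s a minimal sketch; the brief keys (`audience`, `hook_archetype`, `technical_depth`), the `EdgeCase` type, and the exact warning wording are hypothetical, adapted from the two examples above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EdgeCase:
    """A documented failure scenario: which agent, when it applies, what to say."""
    agent: str
    applies: Callable[[dict], bool]
    warning: str


EDGE_CASES = [
    EdgeCase(
        agent="hook_architect",
        applies=lambda b: b.get("audience") == "CTO" and b.get("hook_archetype") == "contrarian",
        warning=(
            "This content targets CTOs, and contrarian hooks may backfire. "
            "Consider the Question archetype instead."
        ),
    ),
    EdgeCase(
        agent="twitter_x_specialist",
        applies=lambda b: b.get("technical_depth") == "high",
        warning="Highly technical audience: engineers tend to prefer depth over snark.",
    ),
]


def edge_case_warnings(agent: str, brief: dict) -> list[str]:
    # Checked before the agent starts, so the warning shapes the work, not the review.
    return [c.warning for c in EDGE_CASES if c.agent == agent and c.applies(brief)]
```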

Fifth - Real Examples

Every agent ships with 5-10 examples of excellent work. The Blog Content Writer references actual published articles with 10,000+ views. The CTA Architect shows conversion data from real campaigns. Agents don’t work from theory; they pattern-match against proven success.
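Pattern-matching against proven work usually comes down to few-shot prompting: putting the best examples in front of the model before the task. Here’s a minimal sketch that keeps only examples above a view threshold (10,000, per the Blog Content Writer) and includes the top few; `Example`, `few_shot_prompt`, and the sample records are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    title: str
    excerpt: str
    views: int


def few_shot_prompt(task: str, examples: list[Example], min_views: int = 10_000, k: int = 3) -> str:
    """Prepend the k best-performing examples that clear the view threshold."""
    proven = sorted(
        (e for e in examples if e.views >= min_views),
        key=lambda e: e.views,
        reverse=True,
    )[:k]
    parts = [f"Example: {e.title}\n{e.excerpt}" for e in proven]
    parts.append(f"Task: {task}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    library = [
        Example("Post A", "placeholder excerpt", 12_000),
        Example("Post B", "placeholder excerpt", 800),
        Example("Post C", "placeholder excerpt", 25_000),
    ]
    print(few_shot_prompt("Write the launch post", library))
```

Ranking purely by views is a simplification; choosing examples that resemble the task at hand is a common alternative.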

Here’s what this actually looks like in practice. When we build a feature now, the UI Designer delivers a Figma file with component specs, the Backend Architect provides API documentation with endpoints, and the QA Engineer signs off on the test plan before deployment. Every handoff has a format. Every quality check has criteria. Every failure has documentation.

We didn’t build this overnight. We started building specialists in June, hit Level 4 chaos by August, and spent September through November architecting Level 5. The breakthrough wasn’t adding more agents; it was adding structure.

What I Wish I’d Known Six Months Ago

Here’s the honest truth about building agentic systems: you can’t skip levels.

I tried. In May, I thought we could jump straight from templates to full orchestration. We couldn’t. Each level teaches you what the next level requires. Templates teach you that consistency isn’t enough. Specialists teach you that expertise without collaboration creates silos. Teams teach you that collaboration without structure creates chaos.

The upgrade path that actually worked for us:

Months 1-2: Built 6 specialist agents. Learned that specialization matters.

Months 3-4: Enabled parallel execution. Discovered coordination chaos.

Months 5-7: Implemented quality schemas and orchestration frameworks. Finally got infrastructure that compounds.

If you’re opening ChatGPT and retyping instructions, you’re at Level 1. If you have Custom GPTs that produce consistent outputs but never better ones, you’re at Level 2. If you have specialists but they don’t collaborate, you’re at Level 3. If you have teams but coordination is chaos, you’re at Level 4.

Be honest about where you actually are. Not where you want to be. Not where your LinkedIn bio says you are. Where you actually operate day-to-day.

The Real Question

Most people use AI like a hammer. One tool, one function, one point of impact. You swing it when you need it. You put it down when you’re done. There’s no memory, no collaboration, no compounding value.

Elite systems use AI like a symphony. Thirty-three instruments, each with specialized expertise, each playing a specific part, all coordinated by a score that defines when each section enters, how loud they play, and how they harmonize with others.

The output isn’t just louder than one instrument. It’s qualitatively different. It’s music, not noise.

Here’s what I’ve learned after six months and way too many mistakes: the question isn’t whether agentic AI works. It’s whether you’re willing to build systems instead of running prompts.

We built 33 agents at Jottly because we were tired of 70% quality. We wanted 97%. We got there by treating AI like an operating system, not a chatbot. By accepting that you can’t skip levels, that each stage teaches you what the next one requires, and that infrastructure compounds while tools depreciate.

You can do the same. But it requires letting go of the hammer and learning to conduct. Start with one department. Build the infrastructure: YAML schemas, input/output validation, quality criteria, self-critique prompts. Get three agents working flawlessly. Then add three more. Then connect departments.

Don’t try to jump from Level 1 to Level 5. You’ll build a 33-agent mess with no coordination and no quality control. Move methodically. One level at a time. One specialist at a time. One department at a time.

The future isn’t about using AI better. It’s about building AI systems that compound.

The difference between AI users and AI orchestrators is the difference between owning a hammer and conducting a symphony. One is a tool you use. The other is infrastructure that compounds.

Want to see how this actually works in practice?

This article was created using Jottly’s Level 5 orchestration system. Eight specialists worked on it - Hook Architect, Psychology Decoder, Story Weaver, Visual Strategist, Blog Writer, Technical Writer, Content Editor, and Brand Guardian. Eight specialists. One cohesive output. That’s what orchestration looks like.

If you’re building your own agentic system and want to compare notes, I’m documenting the whole journey. Subscribe below for the mistakes, the breakthroughs, and the architecture that actually worked.

📧 Subscribe to the Newsletter | 🐦 Follow on Twitter/X | 💼 Connect on LinkedIn
